Data Centre PUE (Power Usage Effectiveness) Explained

If you’ve been shopping for data centres in the last several years, ‘PUE’ has almost certainly been plastered across the glossy marketing material as a major selling point that providers are very proud of. What is this number, and why is it so important?

What is PUE?

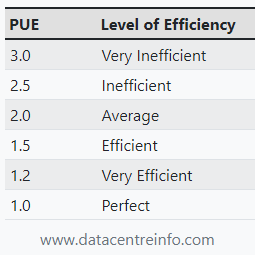

‘PUE’ is short for Power Usage Effectiveness, which, in a single sentence, is a measure of how efficiently a data centre uses power.

A PUE of 1.00 is the ‘perfect’ target, meaning that 100% of the power going into the data centre is used to run the ‘IT Equipment’, such as the servers within it. This perfect scenario can never exist in practice, as there are always overheads in running the facilities that host IT Equipment.
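Written as formulas, PUE and its reciprocal, DCiE (covered below), look like this. The symbols are our own shorthand: $P_{\text{facility}}$ is the total power the facility draws and $P_{\text{IT}}$ is the power consumed by the IT equipment. Because the facility always draws at least as much as its IT equipment, PUE can never fall below 1.00:

```latex
\mathrm{PUE} = \frac{P_{\text{facility}}}{P_{\text{IT}}} \ge 1,
\qquad
\mathrm{DCiE} = \frac{P_{\text{IT}}}{P_{\text{facility}}} \times 100\% = \frac{100\%}{\mathrm{PUE}}
```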

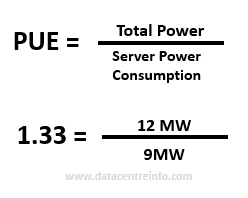

For example, consider a data centre that draws 12MW from the local power grid while the customers’ equipment within it draws only 9MW. Dividing 12MW by 9MW gives a PUE of 1.33, which is pretty good and in line with what we would expect from a modern facility. We can also express this as DCiE (Data Centre infrastructure Efficiency), the reciprocal of PUE: 9MW divided by 12MW equates to 75% efficiency, meaning 25% of the energy is used for overheads.
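The same arithmetic can be expressed as a small Python sketch. The function names `pue` and `dcie` are our own, and the inputs are simply the 12MW and 9MW figures from the example above:

```python
def pue(facility_mw: float, it_mw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_mw <= 0:
        raise ValueError("IT load must be positive")
    return facility_mw / it_mw


def dcie(facility_mw: float, it_mw: float) -> float:
    """Data Centre infrastructure Efficiency: IT power as a fraction of facility power."""
    if facility_mw <= 0:
        raise ValueError("facility power must be positive")
    return it_mw / facility_mw


# Worked example: 12MW drawn from the grid, 9MW used by the IT equipment.
facility, it_load = 12.0, 9.0
print(f"PUE  = {pue(facility, it_load):.2f}")            # prints: PUE  = 1.33
print(f"DCiE = {dcie(facility, it_load):.0%}")           # prints: DCiE = 75%
print(f"Overhead = {1 - dcie(facility, it_load):.0%}")   # prints: Overhead = 25%
```

Because DCiE is just the reciprocal of PUE, either number can be derived from the other; PUE is simply the more commonly quoted of the two.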

Common Overheads include:

Cooling

Cooling is almost always the largest source of energy overhead and can represent over 43% of a data centre’s total energy consumption. Running servers convert almost 100% of the energy they consume into heat, and this heat must be dealt with somehow, often with the help of extractor fans, pumps, chillers and water cooling towers.

In warmer climates, air may need to be cooled below the ambient temperature, which requires additional refrigeration or pre-treatment and further increases energy consumption compared with a site in a milder climate.

Lighting

Data centres are big, and big places require a lot of lighting, which can quickly add up. As servers are not afraid of the dark, many data halls are left unlit when no one is around.

Transformer Losses and Power Conditioning

Power is delivered to data centres at very high voltage, and equipment such as transformers, power conditioning equipment and PDUs (power distribution units) is needed to step the voltage down to the 100-240VAC that most equipment runs on.

The actual percentage of these losses depends heavily on the equipment installed and the voltages it operates at. The publication Understanding and Abstracting Total Data Center Power, written by electrical infrastructure manufacturer APC, estimates these losses at around 8%.

Uninterruptible Power Supplies (UPS)

One of the core expectations of a data centre is 100% uptime, or as close to it as humanly possible. This requires sophisticated UPS systems to guarantee a reliable supply of power. These are usually either static UPS using large battery banks or rotary devices with large flywheels, which supply temporary energy until the generators start producing power. Most UPS systems have an efficiency of around 94-97%.
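To see what a 94-97% efficiency range means in practice, here is a minimal sketch. It assumes the entire IT load passes through the UPS and that efficiency stays constant at that load (real UPS efficiency varies with load). The 9MW figure reuses the earlier example, and the function name `ups_input_power` is our own:

```python
def ups_input_power(it_load_mw: float, efficiency: float) -> float:
    """Power the UPS must draw to deliver it_load_mw at the given efficiency (0-1)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return it_load_mw / efficiency


IT_LOAD_MW = 9.0  # assumed IT load, matching the 9MW example above

for eff in (0.94, 0.97):
    drawn = ups_input_power(IT_LOAD_MW, eff)
    # The difference between input and output is lost as heat inside the UPS.
    print(f"{eff:.0%} efficient UPS: draws {drawn:.2f}MW, loses {drawn - IT_LOAD_MW:.2f}MW")
```

Under these assumptions, the three percentage points between 94% and 97% are worth roughly 0.3MW of continuous draw on a 9MW load. That heat must then be removed by the cooling system too.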

For large-scale applications, rotary UPS backed by diesel generators are quickly becoming a standard, efficient and environmentally friendly alternative to traditional battery-based systems, whose batteries must be disposed of and replaced every 5-10 years.

Coffee Machines & Human Luxuries!

While they are a rounding error in the grand scheme of things, comfortable working conditions for staff are expensive to maintain! There is lighting, air-conditioning and heating in non-technical spaces, kitchens and meeting rooms, and even keeping the right amount of oxygen in the air costs money.

Quite a few data centres, in particular edge locations, have switched to a ‘lights out’ philosophy, where the facility can run unmanned without staff on-site at all times.

Microsoft took this to the extreme in August 2015 with Project Natick, in which it experimented with an underwater data centre concept.
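Putting the overheads together, PUE is simply the IT load plus every overhead, divided by the IT load. The sketch below models this with a hypothetical `FacilityLoad` class. The split between cooling, power losses, lighting and other uses is invented for illustration, not measured data. The values were chosen to total 12MW so the result matches the earlier 1.33 example:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FacilityLoad:
    """Average power draw in MW, split by where the energy goes (illustrative figures)."""
    it_equipment: float
    cooling: float
    power_losses: float  # transformers, power conditioning, PDUs and UPS
    lighting: float
    other: float         # offices, kitchens, meeting rooms and so on

    def total(self) -> float:
        return self.it_equipment + self.cooling + self.power_losses + self.lighting + self.other

    def pue(self) -> float:
        return self.total() / self.it_equipment

    def shares(self) -> dict[str, float]:
        """Each component's share of total facility power."""
        total = self.total()
        return {name: value / total for name, value in vars(self).items()}


# Hypothetical split for a 9MW IT load; not measured data.
site = FacilityLoad(it_equipment=9.0, cooling=2.2, power_losses=0.6, lighting=0.1, other=0.1)
print(f"Total draw: {site.total():.1f}MW, PUE: {site.pue():.2f}")
for name, share in site.shares().items():
    print(f"  {name:<13} {share:6.1%}")
```

Modelling each overhead separately makes it easy to see which change would move PUE the most. In most facilities that will be cooling.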

History of Data Centres

Data centres were born around the same time as the first computers of the 1940s, such as ENIAC. Through the 1950s and 1960s, as large organisations such as banks, airlines, universities and government bodies including NASA began purchasing mainframes, they built their own data centres, often in air-conditioned corporate office basements.

The first truly commercial data centres, and their widespread uptake, arrived with the dot-com bubble of 1997-2000, when companies began to realise the importance of fast, reliable internet connectivity. Since then, new data centres have been built around the world on a daily basis to meet the continuous growth of computing.

Benefits of Improving PUE

A lot has changed since the early days of mainframes, most notably the cost of power, which now represents the bulk of ongoing operating costs in a modern data centre.

Inefficient data centres with a higher PUE consume significantly more power than efficient modern data centres. Lowering PUE therefore reduces electricity costs and, all things being equal, allows the provider to offer more affordable services.

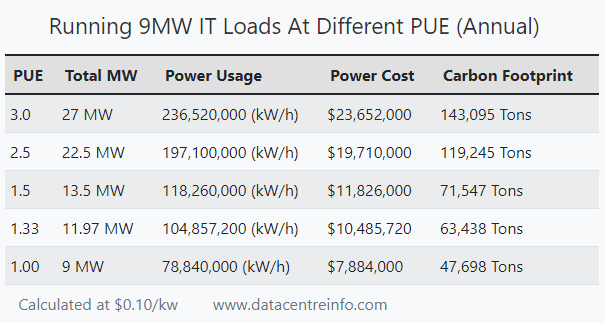

The accompanying table illustrates this for a data centre with a 9MW IT load: as PUE increases, so does total power usage.
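The relationship behind such a table is just total power = IT load × PUE. A minimal sketch follows; the PUE values in the loop are illustrative picks and may not match the rows of the table:

```python
IT_LOAD_MW = 9.0  # IT load from the example in the text


def facility_power(it_load_mw: float, pue: float) -> float:
    """Total power drawn from the grid for a given IT load and PUE."""
    return it_load_mw * pue


print(f"{'PUE':>5} {'Total (MW)':>11} {'Overhead (MW)':>14}")
for value in (1.1, 1.33, 1.5, 2.0, 2.5, 3.0):
    total = facility_power(IT_LOAD_MW, value)
    print(f"{value:>5.2f} {total:>11.2f} {total - IT_LOAD_MW:>14.2f}")
```

Because the IT load is fixed, every 0.1 added to PUE costs another 0.9MW of continuous draw in this example.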

With the ever-increasing cost of energy and the introduction of carbon taxes expected in the near future, this difference will only widen, and less efficient data centres will be unable to compete.
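To put a rough number on that gap, the sketch below converts PUE into a yearly electricity bill. It assumes a constant IT load and a flat tariff. The price of 0.10 per kWh is a hypothetical placeholder in whatever currency you use, and `annual_energy_cost` is our own name:

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_cost(it_load_mw: float, pue: float, price_per_kwh: float) -> float:
    """Yearly electricity cost, assuming a constant IT load and a flat tariff."""
    facility_kw = it_load_mw * 1000 * pue
    return facility_kw * HOURS_PER_YEAR * price_per_kwh


PRICE = 0.10  # hypothetical tariff per kWh; substitute your own
efficient = annual_energy_cost(9.0, 1.33, PRICE)
inefficient = annual_energy_cost(9.0, 2.0, PRICE)
print(f"PUE 1.33: {efficient:,.0f} per year")
print(f"PUE 2.00: {inefficient:,.0f} per year")
print(f"Difference: {inefficient - efficient:,.0f} per year")
```

Since cost scales linearly with price, any rise in the effective price per kWh, whether from energy markets or a carbon tax, widens the absolute gap between the two facilities by the same proportion.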

Strategies for increasing efficiency and decreasing PUE

Every data centre operator is actively looking for ways to increase the efficiency of their facilities. Common ways this is being done include:

- Upgrading cooling systems to more efficient models, such as replacing older CRAC (computer room air conditioner) units with CRAH (computer room air handler) units

- Building data centres in colder climates, where ‘Free Cooling’ from cool ambient outside air can be used

- Setting up Hot and Cold Aisle Containment in data halls so hot and cold air don’t mix

- Advanced airflow and temperature monitoring to reduce hot & cold spots in data halls

- Strict air-management policies, e.g. fitting blanking plates to empty rack spaces

- Running servers and data halls at higher temperatures, e.g. 23°C instead of 20°C

- Upgrading UPS to newer models and designs, including rotary

- Upgrading Transformers and power distribution

- Improving lighting efficiency with LEDs and zoned motion activation

Challenges in Achieving Low PUE

While every provider would love to open a window and let some chilly Nordic air into the building to help cool the servers, this is not always possible, especially if you’re in Miami!

Data centres are intricate machines supporting critical workloads, which makes major maintenance very complex once they are in operation. Some incremental efficiency upgrades can be made to existing equipment, such as replacing fixed-speed pumps with more efficient variable-speed designs and updating lighting. However, it is often impractical to completely redesign or replace entire cooling and electrical distribution systems without significant disruption to occupants.

This leaves data centres stuck in time, unable to practically upgrade to meet the newest standards. The problem is made worse because reducing PUE is a process of diminishing returns. It might make business sense to reduce PUE from 3.0 to 2.0 by picking the low-hanging fruit with a moderate refresh, but going from 2.0 to 1.5 could be cost-prohibitive, making it easier to start from scratch.
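The diminishing returns show up clearly in absolute terms. This sketch reuses the 9MW IT load from earlier and measures overhead as IT load × (PUE - 1). The 3.0 to 2.0 and 2.0 to 1.5 steps come from the text, while the 1.5 to 1.25 step is an extra illustrative one; `overhead_mw` is our own helper name:

```python
IT_LOAD_MW = 9.0  # assumed IT load, as in the earlier example


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Power spent on everything other than the IT equipment."""
    return it_load_mw * (pue - 1)


for before, after in ((3.0, 2.0), (2.0, 1.5), (1.5, 1.25)):
    saved = overhead_mw(IT_LOAD_MW, before) - overhead_mw(IT_LOAD_MW, after)
    fraction_removed = saved / overhead_mw(IT_LOAD_MW, before)
    print(f"PUE {before} -> {after}: saves {saved:.2f}MW "
          f"({fraction_removed:.0%} of the overhead removed)")
```

Each step halves the remaining overhead, yet the megawatts saved halve too (9MW, then 4.5MW, then 2.25MW). Meanwhile the engineering needed to squeeze out what is left usually gets harder.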

As a result, providers tend to build new, next-generation facilities that incorporate the latest technologies to achieve the efficiency and fault tolerance the industry expects.

Data Centres of the Future

Next-generation data centres incorporate many new design features. These include ‘Free Cooling’, which uses outside air to cool the data centre, and collaboration with hardware vendors such as Super Micro on ultra-efficient blade servers, helping facilities reach a PUE as low as 1.06.

Cloud providers, including OVH, are starting to experiment with immersion cooling in their data centres, which can reach a PUE as low as 1.02 for specific use cases with custom hardware.

Currently, the acknowledged leader in data centre efficiency is Google, which claims its data centres consistently average around 1.10, with its second facility at The Dalles, Oregon, dropping as low as 1.06.

In conclusion, Power Usage Effectiveness is an important metric for data centre managers and their customers to consider, and it can give a better understanding of how modern a facility’s technologies are.

Have you used PUE before when comparing data centres? Let us know your thoughts in the comments below.